OK, so I apologise profusely for the pun-tastic post title. Since my last post I've been busy with all kinds of different things; my game projects have all crawled along in the background making s-l-o-w progress. More significantly, I seem to have found myself becoming an evangelist-cum-apologist for Virtual Reality technology.

It's a bit of a weird situation. The major commercial VR products are just about to hit the market. There's been a flurry of activity this last year. Early adopters are poised to pounce. And yet there's also a resigned feeling that the whole thing will be a five-minute wonder. I've heard the phrase "the next 3D TV" so many times that I'm thinking of getting a T-shirt made.

So, is VR going to take over the world? Or will it be just a toy for the kids? (Hey, I have to justify the title somehow!)

View-Master

The Mattel View-Master VR (which I blogged about back in February) has finally hit the streets ready for the Christmas market. You can already buy it from Amazon and Walmart (but, sadly, not in the UK yet ... and the rotters won't ship internationally).

It looks pretty good. For $30 (that's £20 sterling to you, guvnor) you get a plastic Google Cardboard. One that won't fall apart or crumple when it gets sat on.

Mattel's GIF animation. Don't blame me if it doesn't work on your screen!

I can't wait to get one. Except I know what will happen: I'll look through the eyepieces and realise that I won't be able to use it.

The Problem of Interpupillary Distance

The problem is a simple one. My eyes are too far apart. (Yes, I've literally got a big head.)

One of the reasons that binoculars have a big hinge in the middle is that everybody's eyes are a different distance apart. This interpupillary distance (IPD) creates a problem for VR systems, because they need to focus each eye on half of a stereo image -- and that's difficult when IPD varies so much.

The graph above shows that 95% of people sampled fell within the 55-70mm range. Therefore a number of VR manufacturers tend to work on the basis that:

- A fixed IPD of 63mm to 65mm will be comfortable for most of the population.

- Fixed lens position is better than variable, because people will set a variable one wrongly anyway. (That's Firefly VR's position, at least.)

Google's own Cardboard specification originally presumed a fixed 65mm IPD, but that's complicated by the range of different phone screen sizes, which doubles the problem up.

My own 70mm IPD and big-screen phone were enough of a problem that I needed to devise my own scaled-up Cardboard template.
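To put rough numbers on that mismatch, here's a minimal Python sketch. It assumes the two lenses sit symmetrically either side of the nose, so each pupil ends up off-centre by half the difference between the wearer's IPD and the headset's lens separation. The function names are made up for illustration, and the simple IPD ratio is only a guess at a starting scale factor, not a description of how the scaled-up template was actually drawn.

```python
def per_eye_offset_mm(user_ipd_mm, lens_separation_mm):
    """Distance of each pupil from its lens centre, assuming symmetric lenses."""
    return (user_ipd_mm - lens_separation_mm) / 2.0


def within_quoted_range(user_ipd_mm, low_mm=55.0, high_mm=70.0):
    """True if the IPD falls inside the 55-70mm band quoted above."""
    return low_mm <= user_ipd_mm <= high_mm


if __name__ == "__main__":
    my_ipd = 70.0          # the IPD mentioned in this post
    cardboard_ipd = 65.0   # the fixed value the Cardboard spec originally presumed

    # Each eye sits this many millimetres outside its lens centre.
    print(per_eye_offset_mm(my_ipd, cardboard_ipd))

    # Naive linear scale factor (an assumption, for illustration only).
    print(round(my_ipd / cardboard_ipd, 3))

    # 70mm is right on the edge of the quoted band.
    print(within_quoted_range(my_ipd))
```

A couple of millimetres per eye doesn't sound like much, but with strongly magnifying lenses it is enough to push the pupil noticeably away from the optical centre.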

Oculus Rift & chromatic aberration

I picked up an Oculus Rift Development Kit (DK2 version) back in the summer and have been playing with adapting existing VR environments for it.

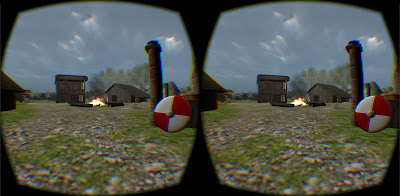

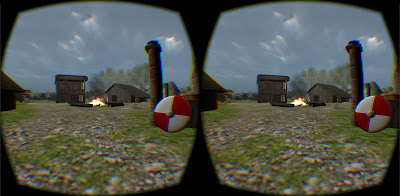

A VR-adapted version of The Lone Viking, a game (by my former students Grant & Chris) for the Unity game engine.

The DK2 tries to get around the IPD problem by adjusting the display output to the user, attempting to compensate for both eye position and focusing issues. As you can see in the photo below, the kit comes with hefty lenses, switchable with near-sighted versions.

The lenses have a high magnification, which increases an effect called chromatic aberration. This effect is demonstrated in the following image (courtesy of mabrowning).

Essentially, the shape of the lens distorts the light coming from individual sub-pixels on the display screen. If your eye is off-centre of the lens then the colours won't position properly -- known as the "screen door" effect. There's a nice article about the issue at VR-tifacts.

This is a big nightmare for fixed IPD devices. Thankfully, it appears that Oculus have taken the plunge and the consumer version of the Rift will have a manual IPD adjustment slider.

Even with this, the issue of aberration is not completely eliminated. It's hard to stop the problem at the extremes of view, so you'll notice that the screenshot above shows some colour fringing where the software attempts to compensate for the problem.
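For the curious, here's a rough Python sketch of one common way software can pre-compensate for this kind of colour fringing: sample the red, green and blue channels from slightly different positions, with the shift growing towards the edge of the lens. This is a simplified illustration, not the Oculus SDK's actual code. The function name and the coefficients `k_red` and `k_blue` are placeholders I've picked for the example, and positions are assumed to be measured from the lens centre at (0, 0).

```python
def channel_sample_positions(x, y, k_red=-0.006, k_blue=0.014):
    """Return the (x, y) source positions to sample for R, G and B.

    Green is used as the reference channel. Red and blue are scaled
    radially by a factor that depends on the squared distance from the
    lens centre, so the correction is zero in the middle and largest
    at the edge of the view.
    """
    r2 = x * x + y * y                # squared distance from lens centre
    red_scale = 1.0 + k_red * r2      # illustrative coefficient only
    blue_scale = 1.0 + k_blue * r2    # illustrative coefficient only
    red = (x * red_scale, y * red_scale)
    green = (x, y)
    blue = (x * blue_scale, y * blue_scale)
    return red, green, blue


if __name__ == "__main__":
    # At the lens centre all three channels line up.
    print(channel_sample_positions(0.0, 0.0))
    # Towards the edge they drift apart, which is where fringing creeps in.
    print(channel_sample_positions(1.0, 0.0))
```

Because the correction is tuned for an eye sitting on the lens axis, an off-centre eye sees the channels recombine in the wrong place, which fits with the fringing being worst at the extremes of view.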

Conclusions

Of course, the big advantage of the Oculus Rift is that you can move your head freely (although there's still a small amount of motion sickness on the DK2). However, I was shocked to find that the image on my £10 made-at-home Google Cardboard felt better than the blurry one on the £300 DK2.

This is likely a result of my big head -- sorry, larger-than-average IPD -- and I'm sure that a carefully-adjusted consumer Oculus Rift will not have that issue.

And what are we going to use VR for anyway? Gaming is seen as the killer app but I doubt companies like Facebook would invest $2 billion into buying Oculus on that basis alone. At work we're already talking realistically about using it for training & education: from welding simulators to biology.

However, I can't help but think that the biggest quantity of sales for VR over the next 12 months will instead be in the toy market. I think Google and Mattel have hit the jackpot in combining nostalgia with high-tech, and those of us with bigger-than-average heads will undoubtedly start cannibalising our cheap VR headsets to join in.