A couple of years ago I was impressed by the release of Gone Home, an indie game set in 1995 with heavy use of applied nostalgia triggers. The design included general period features and some beautiful detailing to evoke a strong emotional reaction as the player explored an abandoned home.

As someone with a strong interest in this area, I was pleased to see nostalgic content appearing sporadically in other games (such as The Witness), but few were as steeped in it as Gone Home. That is, until a few months ago when a new game appeared...

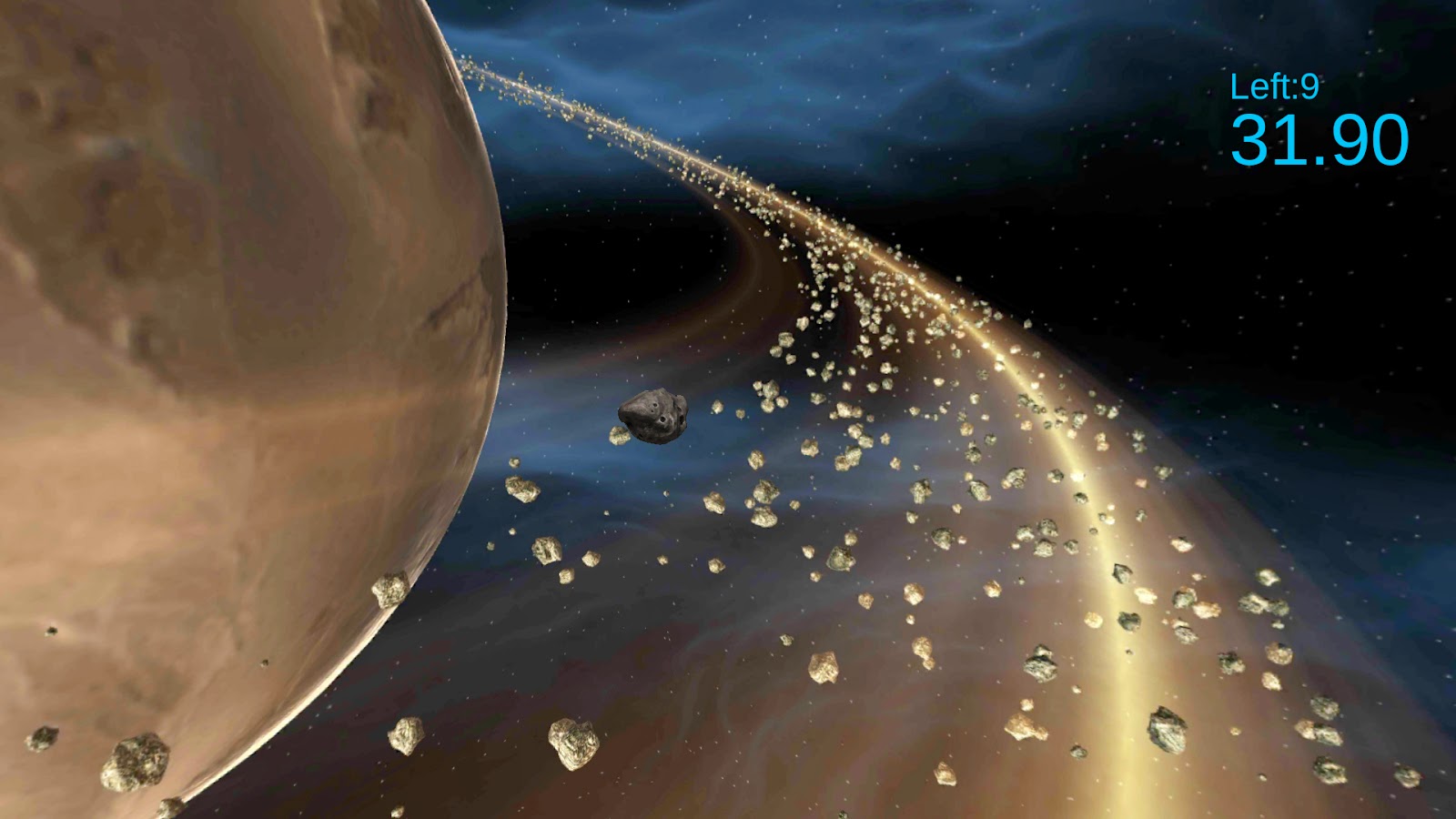

Everybody's Gone To The Rapture

I'd seen articles about this game online and investigated it a bit over the summer; however, I finally got to see it properly in action at the EGX Eurogamer Expo in Birmingham. And I was blown away.

First thing: this game is jaw-droppingly beautiful. The Chinese Room have done an astonishing job on this aesthetically.

The game is full of nostalgia-inducing objects, including 1980s-period Ford Fiesta (and Cortina) cars and an old Commodore 64-style computer (with floppy disk drive and box-style CRT monitor).

There's even a metal push-button payphone (model CT24 if you're feeling particularly geeky about phones), which harks back to my post on the potential of telephones as nostalgia triggers.

The whole thing evokes the feeling of the "deserted village" episodes of 1960s/70s TV shows like The Avengers, UFO, or Doctor Who; period bicycles, a 1970s John Deere tractor, a 1960s-style Transit van ... the level of detail is astonishing.

Mind you, this isn't surprising -- the developers went to a lot of effort to recreate that tone:

"For Everybody's Gone to the Rapture, we really wanted to explore the very British apocalyptic sci-fi of the 60s and 70s," says creative director Dan Pinchbeck. "John Christopher's The Death of Grass and A Wrinkle in the Skin, John Wyndham's Day of the Triffids and some of the really early 'cosy catastrophe' fiction like The Tide Went Out by Robert Wade."

-- "Where literature & gaming collide" by Thomas McMullan, Eurogamer

While researching, I came across the website of Mark Silvester, an environment artist who recently graduated from the University of Portsmouth and was asked by The Chinese Room to do some asset work. Nice to see novice designers pulled into this world. Here's his (original 1979-style) Walkman:

This game really is quite a visual feast and, if you get the chance to watch any gameplay, do. The PS4's power gives life to gorgeous metallic railings, glistening in the sunlight.

I felt like I was swept back to the heady halcyon summer days of the 1970s, and that's a great confirmation of the power of nostalgia applied in video games.